Deploying a Nacos cluster on Kubernetes

Deploy Nacos on Kubernetes with StatefulSets.


For a quick deployment, see the official guide: https://nacos.io/en-us/docs/use-nacos-with-kubernetes.html

1 Quick deployment

    git clone https://github.com/nacos-group/nacos-k8s.git
    cd nacos-k8s
    chmod +x quick-startup.sh
    ./quick-startup.sh

1.2 Testing the service

Replace cluster-ip with the ClusterIP of the Nacos Service.

Register a service:

    curl -X PUT 'http://cluster-ip:8848/nacos/v1/ns/instance?serviceName=nacos.naming.serviceName&ip=20.18.7.10&port=8080'

Discover a service:

    curl -X GET 'http://cluster-ip:8848/nacos/v1/ns/instances?serviceName=nacos.naming.serviceName'

Publish a configuration:

    curl -X POST "http://cluster-ip:8848/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test&content=helloWorld"

Get a configuration:

    curl -X GET "http://cluster-ip:8848/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test"
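The four calls above differ only in HTTP method, path, and query string. A minimal sketch that factors out the shared base address, assuming `curl` is installed; the `NACOS_ADDR` variable and both function names are illustrative and not part of Nacos:

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the curl calls shown above.
# NACOS_ADDR is an assumed variable: set it to <cluster-ip>:8848.
NACOS_ADDR="${NACOS_ADDR:-cluster-ip:8848}"

# Build the full URL for an API path (relative to /nacos/v1/) and a query string.
nacos_url() {
  local path="$1" query="$2"
  printf 'http://%s/nacos/v1/%s?%s\n' "$NACOS_ADDR" "$path" "$query"
}

# Send a request. Arguments: HTTP method, API path, query string.
nacos_call() {
  curl -sS -X "$1" "$(nacos_url "$2" "$3")"
}
```

With `NACOS_ADDR` set to the real address, the registration call becomes `nacos_call PUT ns/instance 'serviceName=nacos.naming.serviceName&ip=20.18.7.10&port=8080'`.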

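2 NFS-based deployment

NFS is used to persist data: the database files as well as the Nacos data and logs.

This approach requires changes to the official YAML files. The steps and YAML files below have been tested and work.

2.1 Set up the NFS server

Pick a machine on the internal network that can reach the k8s cluster (192.168.1.10 here), install the NFS service on it, and choose a suitable disk for the shared directories.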

    yum install -y nfs-utils rpcbind
    mkdir -p /data/nfs
    mkdir -p /data/mysql_master
    mkdir -p /data/mysql_slave
    vim /etc/exports

Add the following entries to /etc/exports:

    /data/nfs *(insecure,rw,async,no_root_squash)
    /data/mysql_slave *(insecure,rw,async,no_root_squash)
    /data/mysql_master *(insecure,rw,async,no_root_squash)

Then start the services and export the directories:

    systemctl start rpcbind
    systemctl start nfs
    systemctl enable rpcbind
    systemctl enable nfs-server
    exportfs -a
    showmount -e
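Before moving on, it can help to confirm from a k8s node that all three directories are really exported. A small sketch, assuming the node has the NFS client tools installed so that `showmount` is available; the function name `check_exports` is made up for this example:

```shell
#!/usr/bin/env bash
# Check that every expected directory appears in `showmount -e` output.
# The export list is read from stdin so the check can also be run offline.
check_exports() {
  local list dir missing=0
  list="$(cat)"
  for dir in "$@"; do
    # The first column of each showmount line is the exported path.
    if ! printf '%s\n' "$list" | awk -v d="$dir" '$1 == d {found=1} END {exit !found}'; then
      echo "missing export: $dir" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run on a node, it would be used as `showmount -e 192.168.1.10 | check_exports /data/nfs /data/mysql_master /data/mysql_slave`; a non-zero exit status means at least one directory is not exported.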

2.2 Deploy the NFS provisioner on k8s

    cd nacos-k8s/deploy/nfs/
    [root@localhost nfs]# ll
    total 12
    -rw-r--r--. 1 root root  153 Oct 15 08:05 class.yaml
    -rw-r--r--. 1 root root  877 Oct 15 14:37 deployment.yaml
    -rw-r--r--. 1 root root 1508 Oct 15 08:05 rbac.yaml

2.2.1 Create the RBAC objects. The default rbac.yaml can be used unmodified; it targets the default namespace. To deploy into a specific namespace, change the namespace fields in it.

    kubectl create -f rbac.yaml

Contents of rbac.yaml:

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner

2.2.2 Create the ServiceAccount and deploy the NFS-Client Provisioner

    kubectl create -f deployment.yaml   ## change the NFS server IP and path first

The modified deployment.yaml:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    # extensions/v1beta1 Deployments were removed in Kubernetes 1.16;
    # newer clusters need apps/v1 plus a spec.selector.
    kind: Deployment
    apiVersion: extensions/v1beta1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccount: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 192.168.1.10
                - name: NFS_PATH
                  value: /data/nfs
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.1.10
                path: /data/nfs

2.2.3 Create the NFS StorageClass

    kubectl create -f class.yaml   ## no changes to the YAML needed

Contents of class.yaml:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs
    parameters:
      archiveOnDelete: "false"
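One way to check that dynamic provisioning works before deploying Nacos is to create a throwaway claim against the new StorageClass. The sketch below only prints the manifest; the claim name `test-claim` and the function name are placeholders chosen for this example, not something shipped with nacos-k8s:

```shell
#!/usr/bin/env bash
# Print a small PersistentVolumeClaim that requests storage from the
# given StorageClass (defaults to the managed-nfs-storage class above).
# "test-claim" is a placeholder name used only for this check.
render_test_pvc() {
  local class="${1:-managed-nfs-storage}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
spec:
  storageClassName: ${class}
  accessModes: [ "ReadWriteMany" ]
  resources:
    requests:
      storage: 1Mi
EOF
}
```

Piping it into the cluster with `render_test_pvc | kubectl create -f -` and then running `kubectl get pvc test-claim` should show the claim as Bound if the provisioner is healthy; `kubectl delete pvc test-claim` cleans up afterwards.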

2.3 Deploy the databases

    cd nacos-k8s/deploy/mysql/

2.3.1 Deploy the master database

    kubectl create -f mysql-master-nfs.yaml   ## change the NFS server IP and path

The modified mysql-master-nfs.yaml:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: mysql-master
      labels:
        name: mysql-master
    spec:
      replicas: 1
      selector:
        name: mysql-master
      template:
        metadata:
          labels:
            name: mysql-master
        spec:
          containers:
            - name: master
              image: nacos/nacos-mysql-master:latest
              ports:
                - containerPort: 3306
              volumeMounts:
                - name: mysql-master-data
                  mountPath: /var/lib/mysql
              env:
                - name: MYSQL_ROOT_PASSWORD
                  value: "root"
                - name: MYSQL_DATABASE
                  value: "nacos_devtest"
                - name: MYSQL_USER
                  value: "nacos"
                - name: MYSQL_PASSWORD
                  value: "nacos"
                - name: MYSQL_REPLICATION_USER
                  value: 'nacos_ru'
                - name: MYSQL_REPLICATION_PASSWORD
                  value: 'nacos_ru'
          volumes:
            - name: mysql-master-data
              nfs:
                server: 192.168.1.10
                path: /data/mysql_master
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-master
      labels:
        name: mysql-master
    spec:
      ports:
        - port: 3306
          targetPort: 3306
      selector:
        name: mysql-master

2.3.2 Deploy the slave database

    kubectl create -f mysql-slave-nfs.yaml

The modified mysql-slave-nfs.yaml:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: mysql-slave
      labels:
        name: mysql-slave
    spec:
      replicas: 1
      selector:
        name: mysql-slave
      template:
        metadata:
          labels:
            name: mysql-slave
        spec:
          containers:
            - name: slave
              image: nacos/nacos-mysql-slave:latest
              ports:
                - containerPort: 3306
              volumeMounts:
                - name: mysql-slave-data
                  mountPath: /var/lib/mysql
              env:
                - name: MYSQL_ROOT_PASSWORD
                  value: "root"
                - name: MYSQL_REPLICATION_USER
                  value: 'nacos_ru'
                - name: MYSQL_REPLICATION_PASSWORD
                  value: 'nacos_ru'
          volumes:
            - name: mysql-slave-data
              nfs:
                server: 192.168.1.10
                path: /data/mysql_slave
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-slave
      labels:
        name: mysql-slave
    spec:
      ports:
        - port: 3306
          targetPort: 3306
      selector:
        name: mysql-slave
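The manifests do not show whether the slave is actually replicating. A rough check, assuming the two images configure ordinary MySQL replication and that the MySQL version in the slave image still accepts `SHOW SLAVE STATUS`; the function name `replication_ok` is invented for this sketch:

```shell
#!/usr/bin/env bash
# Succeed only if both replication threads report "Yes" in the
# `SHOW SLAVE STATUS\G` text read from stdin.
replication_ok() {
  local status
  status="$(cat)"
  printf '%s\n' "$status" | grep -Eq '^[[:space:]]*Slave_IO_Running:[[:space:]]*Yes' &&
    printf '%s\n' "$status" | grep -Eq '^[[:space:]]*Slave_SQL_Running:[[:space:]]*Yes'
}

# Usage (needs kubectl access; root/root is the password from the YAML above):
#   pod="$(kubectl get pod -l name=mysql-slave -o jsonpath='{.items[0].metadata.name}')"
#   kubectl exec "$pod" -- mysql -uroot -proot -e 'SHOW SLAVE STATUS\G' | replication_ok \
#     && echo "replication running"
```

If either thread is not running, the `Last_IO_Error` and `Last_SQL_Error` fields of the same output are the usual place to look next.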

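2.4 Deploy Nacos

    cd nacos-k8s/deploy/nacos/

    kubectl create -f nacos-pvc-nfs.yaml

This file needs substantial changes: start from the quickstart version, add the volume mounts, and remove everything unrelated. The result is shown below.

Note the `- name: NACOS_SERVERS` entry. Pod domain names are generated automatically at creation time and normally end in cluster.local, for example nacos-0.nacos-headless.default.svc.cluster.local:8848. My cluster's default domain is set to cluster.test, so the file uses nacos-0.nacos-headless.default.svc.cluster.test:8848 instead.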
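Because the value follows a fixed pattern (pod name, headless Service, namespace, `svc`, cluster domain, port), it can be generated rather than typed by hand. A minimal sketch; the function name and argument order are choices made for this example:

```shell
#!/usr/bin/env bash
# Build the space-separated NACOS_SERVERS value for a StatefulSet.
# Arguments: replicas, StatefulSet name, headless Service name,
#            namespace, cluster domain, port (optional, default 8848).
nacos_servers() {
  local replicas="$1" sts="$2" svc="$3" ns="$4" domain="$5" port="${6:-8848}"
  local i=0 out=""
  while [ "$i" -lt "$replicas" ]; do
    # StatefulSet pods are named <statefulset>-<ordinal>, starting at 0.
    out="${out:+$out }${sts}-${i}.${svc}.${ns}.svc.${domain}:${port}"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

For the cluster described here, `nacos_servers 3 nacos nacos-headless default cluster.test` prints the three addresses used in the YAML. If the cluster domain is unknown, the `search` line of `/etc/resolv.conf` inside any running pod usually reveals it.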

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nacos-headless
      labels:
        app: nacos-headless
    spec:
      ports:
        - port: 8848
          name: server
          targetPort: 8848
      selector:
        app: nacos
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nacos-cm
    data:
      mysql.master.db.name: "nacos_devtest"
      mysql.master.port: "3306"
      mysql.slave.port: "3306"
      mysql.master.user: "nacos"
      mysql.master.password: "nacos"
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nacos
    spec:
      serviceName: nacos-headless
      replicas: 3
      template:
        metadata:
          labels:
            app: nacos
          annotations:
            pod.alpha.kubernetes.io/initialized: "true"
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchExpressions:
                      - key: "app"
                        operator: In
                        values:
                          - nacos-headless
                  topologyKey: "kubernetes.io/hostname"
          containers:
            - name: k8snacos
              imagePullPolicy: Always
              image: nacos/nacos-server:latest
              resources:
                requests:
                  memory: "2Gi"
                  cpu: "500m"
              ports:
                - containerPort: 8848
                  name: client
              env:
                - name: NACOS_REPLICAS
                  value: "3"
                - name: SERVICE_NAME
                  value: "nacos-headless"
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: metadata.namespace
                - name: MYSQL_MASTER_SERVICE_DB_NAME
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.db.name
                - name: MYSQL_MASTER_SERVICE_PORT
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.port
                - name: MYSQL_SLAVE_SERVICE_PORT
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.slave.port
                - name: MYSQL_MASTER_SERVICE_USER
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.user
                - name: MYSQL_MASTER_SERVICE_PASSWORD
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.password
                - name: NACOS_SERVER_PORT
                  value: "8848"
                - name: PREFER_HOST_MODE
                  value: "hostname"
                - name: NACOS_SERVERS
                  value: "nacos-0.nacos-headless.default.svc.cluster.test:8848 nacos-1.nacos-headless.default.svc.cluster.test:8848 nacos-2.nacos-headless.default.svc.cluster.test:8848"
              volumeMounts:
                - name: datadir
                  mountPath: /home/nacos/data
                - name: logdir
                  mountPath: /home/nacos/logs
      volumeClaimTemplates:
        - metadata:
            name: datadir
            annotations:
              volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
          spec:
            accessModes: [ "ReadWriteMany" ]
            resources:
              requests:
                storage: 5Gi
        - metadata:
            name: logdir
            annotations:
              volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
          spec:
            accessModes: [ "ReadWriteMany" ]
            resources:
              requests:
                storage: 5Gi
      selector:
        matchLabels:
          app: nacos

Alternatively, the original version of the file can be used:

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nacos-headless
      labels:
        app: nacos
      annotations:
        service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
    spec:
      ports:
        - port: 8848
          name: server
          targetPort: 8848
      clusterIP: None
      selector:
        app: nacos
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nacos-cm
    data:
      mysql.master.db.name: "nacos_devtest"
      mysql.master.port: "3306"
      mysql.slave.port: "3306"
      mysql.master.user: "nacos"
      mysql.master.password: "nacos"
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nacos
    spec:
      serviceName: nacos-headless
      replicas: 2
      template:
        metadata:
          labels:
            app: nacos
          annotations:
            pod.alpha.kubernetes.io/initialized: "true"
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchExpressions:
                      - key: "app"
                        operator: In
                        values:
                          - nacos
                  topologyKey: "kubernetes.io/hostname"
          serviceAccountName: nfs-client-provisioner
          initContainers:
            - name: peer-finder-plugin-install
              image: nacos/nacos-peer-finder-plugin:latest
              imagePullPolicy: Always
              volumeMounts:
                - mountPath: "/home/nacos/plugins/peer-finder"
                  name: plugindir
          containers:
            - name: nacos
              imagePullPolicy: Always
              image: nacos/nacos-server:latest
              resources:
                requests:
                  memory: "2Gi"
                  cpu: "1000m"
              ports:
                - containerPort: 8848
                  name: client-port
              env:
                - name: NACOS_REPLICAS
                  value: "3"
                - name: SERVICE_NAME
                  value: "nacos-headless"
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: metadata.namespace
                - name: MYSQL_MASTER_SERVICE_DB_NAME
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.db.name
                - name: MYSQL_MASTER_SERVICE_PORT
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.port
                - name: MYSQL_SLAVE_SERVICE_PORT
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.slave.port
                - name: MYSQL_MASTER_SERVICE_USER
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.user
                - name: MYSQL_MASTER_SERVICE_PASSWORD
                  valueFrom:
                    configMapKeyRef:
                      name: nacos-cm
                      key: mysql.master.password
                - name: NACOS_SERVER_PORT
                  value: "8848"
                - name: PREFER_HOST_MODE
                  value: "hostname"
              readinessProbe:
                httpGet:
                  port: client-port
                  path: /nacos/v1/console/health/readiness
                initialDelaySeconds: 60
                timeoutSeconds: 3
              livenessProbe:
                httpGet:
                  port: client-port
                  path: /nacos/v1/console/health/liveness
                initialDelaySeconds: 60
                timeoutSeconds: 3
              volumeMounts:
                - name: plugindir
                  mountPath: /home/nacos/plugins/peer-finder
                - name: datadir
                  mountPath: /home/nacos/data
                - name: logdir
                  mountPath: /home/nacos/logs
      volumeClaimTemplates:
        - metadata:
            name: plugindir
            annotations:
              volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
          spec:
            accessModes: [ "ReadWriteMany" ]
            resources:
              requests:
                storage: 5Gi
        - metadata:
            name: datadir
            annotations:
              volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
          spec:
            accessModes: [ "ReadWriteMany" ]
            resources:
              requests:
                storage: 5Gi
        - metadata:
            name: logdir
            annotations:
              volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
          spec:
            accessModes: [ "ReadWriteMany" ]
            resources:
              requests:
                storage: 5Gi
      selector:
        matchLabels:
          app: nacos

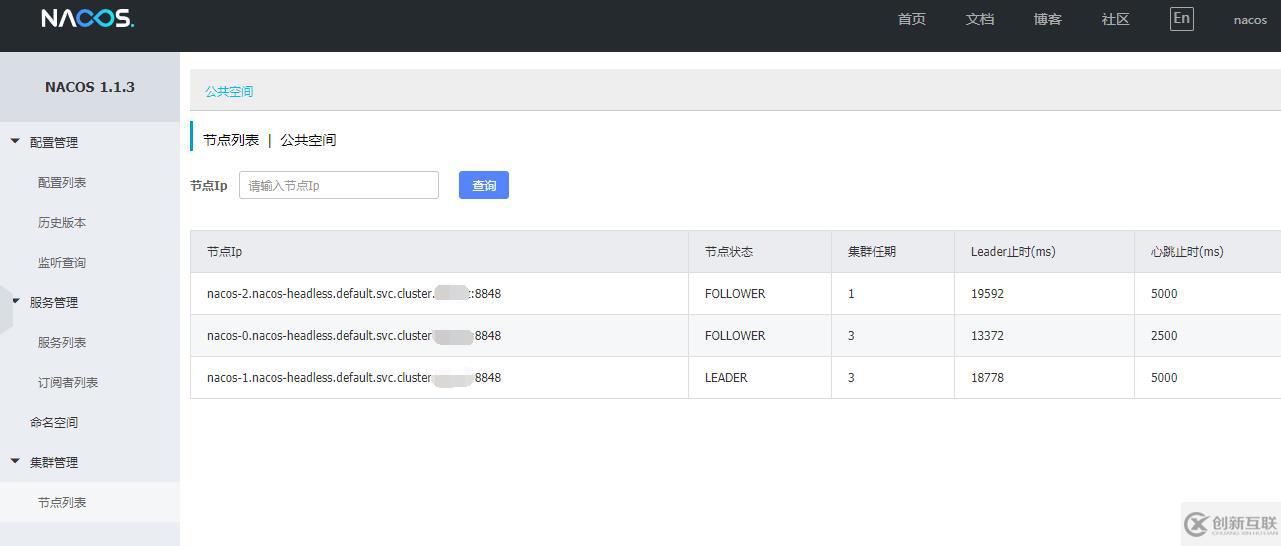

Check the result:

    [root@localhost nacos]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    mysql-master-hnnzq                        1/1     Running   0          43h
    mysql-slave-jjq98                         1/1     Running   0          43h
    nacos-0                                   1/1     Running   0          41h
    nacos-1                                   1/1     Running   0          41h
    nacos-2                                   1/1     Running   0          41h
    nfs-client-provisioner-57c8c85896-cpxtx   1/1     Running   0          45h

    [root@localhost nacos]# kubectl get svc
    NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)     AGE
    kubernetes       ClusterIP   172.21.0.1      <none>        443/TCP     9d
    mysql-master     ClusterIP   172.21.12.11    <none>        3306/TCP    43h
    mysql-slave      ClusterIP   172.21.1.9      <none>        3306/TCP    43h
    nacos-headless   ClusterIP   172.21.11.220   <none>        8848/TCP    41h
    nginx-svc        ClusterIP   172.21.1.104    <none>        10080/TCP   8d

    [root@localhost nacos]# kubectl get storageclass
    NAME                       PROVISIONER      AGE
    alicloud-disk-available    alicloud/disk    9d
    alicloud-disk-efficiency   alicloud/disk    9d
    alicloud-disk-essd         alicloud/disk    9d
    alicloud-disk-ssd          alicloud/disk    9d
    managed-nfs-storage        fuseim.pri/ifs   45h

    [root@localhost nacos]# kubectl get pv,pvc
    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                       STORAGECLASS          REASON   AGE
    persistentvolume/pvc-c920f9cf-f56f-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/datadir-nacos-0     managed-nfs-storage            43h
    persistentvolume/pvc-c921977d-f56f-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/logdir-nacos-0      managed-nfs-storage            43h
    persistentvolume/pvc-c922401f-f56f-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/plugindir-nacos-0   managed-nfs-storage            43h
    persistentvolume/pvc-db3ccda6-f56f-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/datadir-nacos-1     managed-nfs-storage            43h
    persistentvolume/pvc-db3dc25a-f56f-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/logdir-nacos-1      managed-nfs-storage            43h
    persistentvolume/pvc-db3eb86c-f56f-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/plugindir-nacos-1   managed-nfs-storage            43h
    persistentvolume/pvc-fa47ae6e-f57a-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/logdir-nacos-2      managed-nfs-storage            41h
    persistentvolume/pvc-fa489723-f57a-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/plugindir-nacos-2   managed-nfs-storage            41h
    persistentvolume/pvc-fa494137-f57a-11e9-90dc-da6119823c38   5Gi        RWX            Delete           Bound    default/datadir-nacos-2     managed-nfs-storage            41h

    NAME                                      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    persistentvolumeclaim/datadir-nacos-0     Bound    pvc-c920f9cf-f56f-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   43h
    persistentvolumeclaim/datadir-nacos-1     Bound    pvc-db3ccda6-f56f-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   43h
    persistentvolumeclaim/datadir-nacos-2     Bound    pvc-fa494137-f57a-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   41h
    persistentvolumeclaim/logdir-nacos-0      Bound    pvc-c921977d-f56f-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   43h
    persistentvolumeclaim/logdir-nacos-1      Bound    pvc-db3dc25a-f56f-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   43h
    persistentvolumeclaim/logdir-nacos-2      Bound    pvc-fa47ae6e-f57a-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   41h
    persistentvolumeclaim/plugindir-nacos-0   Bound    pvc-c922401f-f56f-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   43h
    persistentvolumeclaim/plugindir-nacos-1   Bound    pvc-db3eb86c-f56f-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   43h
    persistentvolumeclaim/plugindir-nacos-2   Bound    pvc-fa489723-f57a-11e9-90dc-da6119823c38   5Gi        RWX            managed-nfs-storage   41h

Finally, expose the Service through an Ingress so that port 8848 can be reached from outside the cluster.
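The article does not include the Ingress itself. As a rough sketch only, assuming Kubernetes 1.19 or later (where `networking.k8s.io/v1` is available), an ingress controller already running in the cluster, and a placeholder host name `nacos.example.com`, the manifest could be generated like this; the function name and the Ingress name `nacos` are illustrative:

```shell
#!/usr/bin/env bash
# Print an Ingress that routes /nacos on the given host to port 8848
# of the nacos-headless Service. The host is a placeholder to replace.
render_nacos_ingress() {
  local host="${1:-nacos.example.com}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nacos
spec:
  rules:
    - host: ${host}
      http:
        paths:
          - path: /nacos
            pathType: Prefix
            backend:
              service:
                name: nacos-headless
                port:
                  number: 8848
EOF
}
```

It would be applied with `render_nacos_ingress nacos.example.com | kubectl create -f -`. Older clusters, such as the one the YAML above was tested on, use a different Ingress API version and backend syntax, so the manifest would need adjusting there.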
