Spark on YARN Installation and Configuration

1. Overview

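This article builds on the deployment described in xxx and relies on the Hadoop configuration and dependencies already in place there. In Spark on YARN mode you only need to install and configure Spark: install it on every node of the YARN cluster and keep the configuration in sync. No Spark services need to be started and there is no master/slave distinction. Spark submits jobs to YARN, and YARN's ResourceManager schedules them.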

2. Installation

yum -y install spark-core spark-netlib spark-python

3. Configuration

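vim /etc/spark/conf/spark-defaults.conf

spark.eventLog.enabled false
spark.executor.extraJavaOptions -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:MaxHeapFreeRatio=70 -XX:+CMSClassUnloadingEnabled
spark.driver.extraJavaOptions -Dspark.driver.log.level=INFO -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:MaxHeapFreeRatio=70 -XX:+CMSClassUnloadingEnabled -XX:MaxPermSize=512M
# Run mode: submit Spark jobs to YARN
spark.master yarn

Keep comments on their own lines: in this properties-style file a "#" in the middle of a line is not a comment and would become part of the value. Also note that -XX:MaxPermSize has no effect on Java 8 (PermGen was removed), which is why the JVM prints an "ignoring option MaxPermSize" warning in the test output below.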
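Spark reads spark-defaults.conf as a Java properties file; as far as I know it loads it with java.util.Properties and trims each value. The Scala sketch below (the SparkDefaultsSketch name is made up for illustration) parses text the same way, so you can check what a given line turns into before syncing the file to every node.

```scala
import java.io.StringReader
import java.util.Properties

// Hypothetical helper: parses spark-defaults.conf-style text with java.util.Properties,
// assuming this mirrors how Spark loads the file (load, then trim each value).
object SparkDefaultsSketch {
  def parse(text: String): Map[String, String] = {
    val props = new Properties()
    // The key ends at the first unescaped whitespace, '=' or ':'; the rest is the value.
    // Only lines whose first non-blank character is '#' or '!' are comments.
    props.load(new StringReader(text))
    props.stringPropertyNames().toArray(new Array[String](0))
      .map(key => key -> props.getProperty(key).trim)
      .toMap
  }
}
```

For example, parsing "spark.master yarn ##run mode" yields the value "yarn ##run mode" rather than "yarn", which is why comments belong on their own lines.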

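PS: Regarding spark-env.sh: my Hadoop cluster was installed via yum, so the default configuration is enough for Spark to find Hadoop's configuration and dependencies. If your Hadoop cluster was installed from a binary tarball, adjust the corresponding paths, for example HADOOP_CONF_DIR (or YARN_CONF_DIR), which Spark on YARN uses to locate the cluster's client-side configuration files.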
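As a hypothetical pre-flight check (not Spark's own logic), the sketch below resolves the Hadoop configuration directory from HADOOP_CONF_DIR, then YARN_CONF_DIR, and otherwise falls back to /etc/hadoop/conf. That fallback is an assumption about where a yum/package install keeps its client configuration. The check then confirms that yarn-site.xml is present in the resolved directory.

```scala
import java.io.File

// Hypothetical helper, not part of Spark: picks a Hadoop config directory from the
// environment and verifies it contains the YARN client configuration.
object HadoopConfDirCheck {
  // Assumption: typical config location for package (yum) installs.
  val DefaultConfDir = "/etc/hadoop/conf"

  // Prefer HADOOP_CONF_DIR, then YARN_CONF_DIR, then the assumed default.
  def resolve(env: Map[String, String]): String =
    env.get("HADOOP_CONF_DIR")
      .orElse(env.get("YARN_CONF_DIR"))
      .getOrElse(DefaultConfDir)

  // True if the directory holds a yarn-site.xml file.
  def hasYarnSite(dir: String): Boolean =
    new File(dir, "yarn-site.xml").isFile
}
```

On a node you could call HadoopConfDirCheck.hasYarnSite(HadoopConfDirCheck.resolve(sys.env)); a false result suggests the paths in spark-env.sh need adjusting.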

4. Testing

a. Testing with spark-shell

[root@ip-10-10-103-144 conf]# cat test.txt
11
22
33
44
55
[root@ip-10-10-103-144 conf]# hadoop fs -put test.txt /tmp/
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

[root@ip-10-10-103-246 conf]# spark-shell
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel).
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh6.11.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.6.0
      /_/

Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_121)
Type in expressions to have them evaluated.
Type :help for more information.

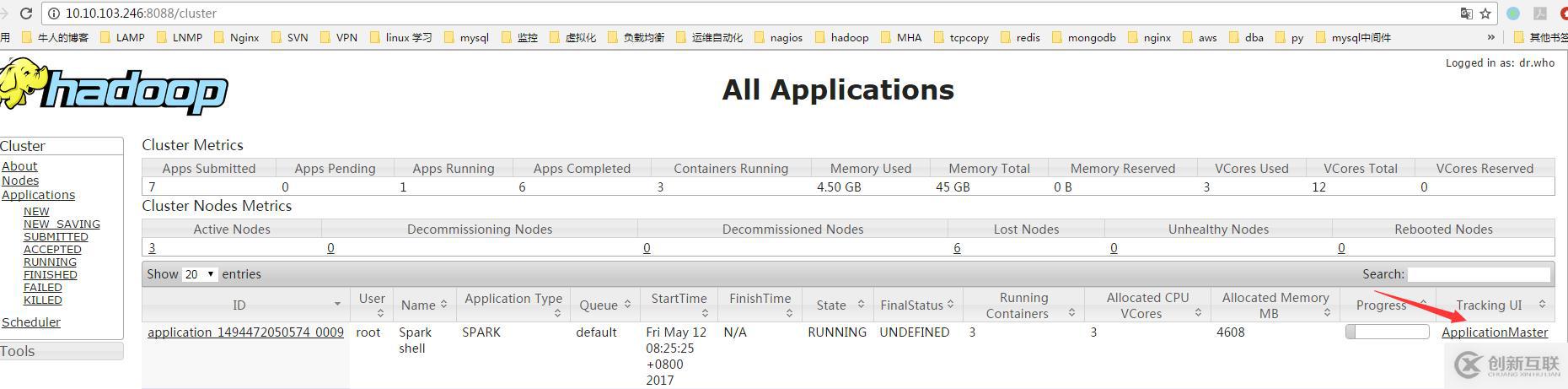

Spark context available as sc (master = yarn-client, app id = application_1494472050574_0009).
SQL context available as sqlContext.

scala> val file=sc.textFile("hdfs://mycluster:8020/tmp/test.txt")
file: org.apache.spark.rdd.RDD[String] = hdfs://mycluster:8020/tmp/test.txt MapPartitionsRDD[1] at textFile at <console>:27

scala> val count=file.flatMap(line=>line.split(" ")).map(test=>(test,1)).reduceByKey(_+_)
count: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[4] at reduceByKey at <console>:29
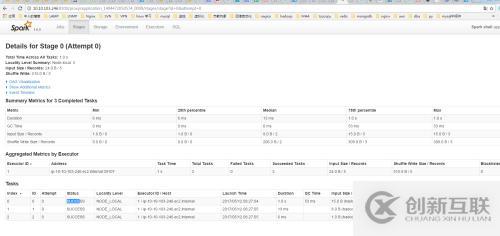

scala> count.collect()
res0: Array[(String, Int)] = Array((33,1), (55,1), (22,1), (44,1), (11,1))

scala>
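The word count above chains flatMap, map and reduceByKey on an RDD. To show what that pipeline computes without a cluster, here is a local sketch on plain Scala collections (WordCountSketch is an illustrative name). groupBy plus a sum stands in for reduceByKey; on Spark, reduceByKey instead combines the values for each key across partitions through a shuffle.

```scala
// Local, single-machine sketch of the spark-shell word count (no Spark needed).
object WordCountSketch {
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" ").toSeq) // like RDD.flatMap: split each line into words
      .map(word => (word, 1))                 // like RDD.map: pair every word with a count of 1
      .groupBy(_._1)                          // gather pairs that share the same word...
      .map { case (word, pairs) => word -> pairs.map(_._2).sum } // ...and add their counts, like reduceByKey(_+_)
}
```

Feeding it the five lines of test.txt gives each number a count of 1, matching res0 above (Spark returns the pairs in no particular order).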

b. Testing with run-example

[root@ip-10-10-103-246 conf]# /usr/lib/spark/bin/run-example SparkPi 2>&1 | grep "Pi is roughly"
Pi is roughly 3.1432557162785812
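SparkPi estimates π by Monte Carlo sampling: it draws random points in a square, counts how many land inside the inscribed circle, and scales that fraction by 4. The local sketch below performs the same computation without Spark; MonteCarloPi and the fixed seed are illustrative choices, not part of the Spark examples. Because the samples are random, the value printed on the cluster differs slightly from run to run.

```scala
import scala.util.Random

// Local Monte Carlo estimate of pi, the same idea SparkPi distributes across executors.
object MonteCarloPi {
  def estimate(samples: Int, seed: Long = 42L): Double = {
    val rng = new Random(seed) // fixed seed so repeated calls give the same estimate
    val inside = (1 to samples).count { _ =>
      val x = rng.nextDouble() * 2 - 1 // uniform in [-1, 1)
      val y = rng.nextDouble() * 2 - 1
      x * x + y * y <= 1 // inside the unit circle?
    }
    // (circle area / square area) = pi / 4, so scale the hit ratio by 4
    4.0 * inside / samples
  }
}
```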

5. Problems Encountered

Running the computation in spark-shell produced the following error:

scala> val count=file.flatMap(line=>line.split(" ")).map(word=>(word,1)).reduceByKey(_+_)
17/05/11 21:06:28 ERROR lzo.GPLNativeCodeLoader: Could not load native gpl library
java.lang.UnsatisfiedLinkError: no gplcompression in java.library.path
    at java.lang.ClassLoader.loadLibrary(ClassLoader.java:1867)
    at java.lang.Runtime.loadLibrary0(Runtime.java:870)
    at java.lang.System.loadLibrary(System.java:1122)
    at com.hadoop.compression.lzo.GPLNativeCodeLoader.<clinit>(GPLNativeCodeLoader.java:32)
    at com.hadoop.compression.lzo.LzoCodec.<clinit>(LzoCodec.java:71)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at $line20.$read.<init>(<console>:48)
    at $line20.$read$.<init>(<console>:52)
    at $line20.$read$.<clinit>(<console>)
    at $line20.$eval$.<init>(<console>:7)
    at $line20.$eval$.<clinit>(<console>)
    at $line20.$eval.$print(<console>)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1045)
    at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1326)
    at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:821)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:852)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:800)
    at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)
    at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)

Solution:

Add the following to spark-env.sh:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/lib/hadoop/lib/native/

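This lets Spark find the native LZO library (gplcompression). Restart spark-shell afterwards so the new environment takes effect.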
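The UnsatisfiedLinkError means that System.loadLibrary("gplcompression") found no matching file in any directory on java.library.path. On Linux the HotSpot JVM builds its default java.library.path from LD_LIBRARY_PATH at startup, which is why exporting it in spark-env.sh helps. The sketch below (NativeLibLookup is a hypothetical helper) approximates that directory search so you can check a path before restarting the shell.

```scala
import java.io.File

// Hypothetical helper approximating the directory search behind System.loadLibrary.
object NativeLibLookup {
  def find(libName: String, libraryPath: String): Option[File] = {
    // Platform-specific file name, e.g. "libgplcompression.so" on Linux
    val fileName = System.mapLibraryName(libName)
    libraryPath
      .split(File.pathSeparator) // ':' on Linux
      .filter(_.nonEmpty)
      .map(dir => new File(dir, fileName))
      .find(_.isFile) // first directory that actually contains the library
  }
}
```

For instance, NativeLibLookup.find("gplcompression", System.getProperty("java.library.path")) returns None in a JVM that would hit the error above. The JVM caches its library path, so changing it from inside a running REPL generally does not help; LD_LIBRARY_PATH has to be set before spark-shell starts.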

Source URL: https://www.cdcxhl.com/article2/pjgic.html
